Chicago Music Compass

Author: Jaime Garcia Diaz

May 2024

Intro

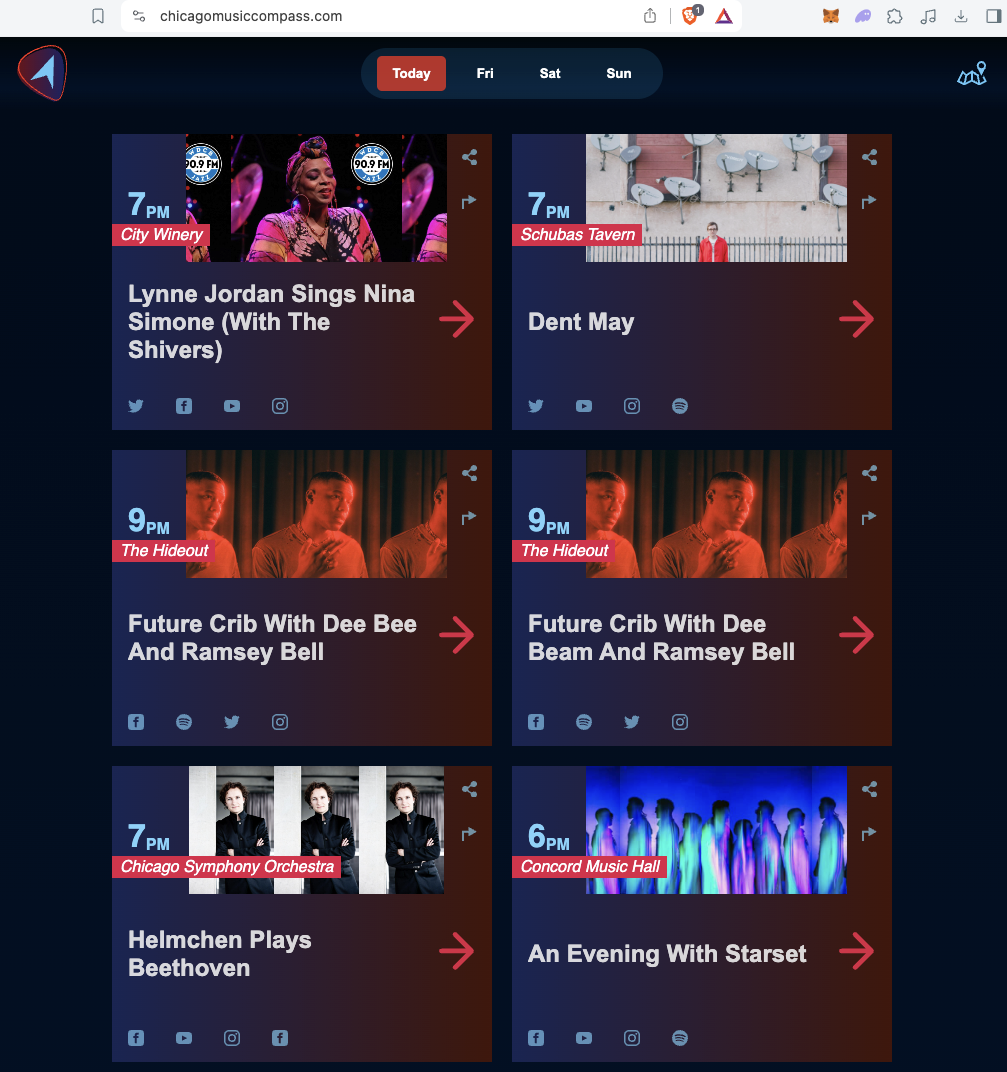

The goal is to provide a quick overview of music events happening in Chicago in the coming days.

Many aggregator websites effectively display calendars of events, spanning not only the near future but also months and even years ahead. However, Chicago Music Compass doesn't aim to compete with such aggregators. Instead, it focuses solely on the current week. Visitors will see at most seven days of events, with fewer days displayed as the week progresses. This cycle repeats each week.
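The shrinking weekly window can be sketched in a few lines. This is a minimal illustration rather than the site's actual code: it assumes the week runs Monday through Sunday, and `remaining_days_of_week` is a hypothetical helper name.

```python
from datetime import date, timedelta


def remaining_days_of_week(today: date) -> list[date]:
    """Return today and every later day of the same week.

    Assumption: the week ends on Sunday (weekday() == 6).
    """
    days_left = 6 - today.weekday()
    return [today + timedelta(days=offset) for offset in range(days_left + 1)]
```

Under that assumption, a Monday yields seven days, a Thursday yields four, and a Sunday yields only itself.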

The mission of Chicago Music Compass is to answer the question: Which concert should I attend this week?

Chicago offers a diverse range of music events, spanning multiple genres, atmospheres, and price ranges.

Website

Architecture Components

ETLs

The data is extracted primarily from two sources:

- RESTful APIs

- Scrapers

Each source has an ETL process that transforms the external data into the expected format.

A cron job runs all the ETL processes daily, one after the other (in series).
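Running the ETLs in series could look roughly like the sketch below. The function name and the shape of the report are hypothetical, and the choice to keep going after a failure is an assumption; the source only states that the processes run one after the other.

```python
from typing import Callable


def run_etls_in_series(etls: dict[str, Callable[[], int]]) -> dict[str, str]:
    """Run each ETL one after the other and record its outcome.

    Each callable is expected to return the number of events it saved.
    Assumption: a failing ETL is recorded and the remaining ones still run.
    """
    report = {}
    for name, etl in etls.items():
        try:
            saved = etl()
            report[name] = f"ok: {saved} events"
        except Exception as error:  # one bad source should not block the rest
            report[name] = f"failed: {error}"
    return report
```

Running in series keeps the load on shared services (the database and the third-party APIs) predictable, at the cost of a longer total run time.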

ETL

Each ETL process uses similar helper methods to interact with external services.

Once the event is extracted, the script tries to identify the Venue and Artists.

For the Venue, the ETL makes a request to the Google Maps API, passing the name of the venue and a radius. If Google Maps identifies the venue, the process continues; otherwise, the event is discarded. One rule is that all events must have an identifiable venue.

For the Artists, the ETL queries MusicBrainz, an open music metadata database. If the artist is found, it is saved in the database. If not, the event is still saved, but without an identified artist.

Additionally, if the artist is found, another request is made to Spotify to get more information about the artist.
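The venue and artist rules above can be summarized in one function. This is a sketch under stated assumptions: the three lookup callables stand in for the Google Maps, MusicBrainz, and Spotify requests, and the event field names (`venue`, `artists`) are hypothetical.

```python
from typing import Callable, Optional


def enrich_event(
    event: dict,
    find_venue: Callable[[str], Optional[dict]],
    find_artist: Callable[[str], Optional[dict]],
    find_artist_details: Callable[[str], Optional[dict]],
) -> Optional[dict]:
    """Apply the venue and artist rules to one extracted event.

    Returns None when the venue cannot be identified, because every
    saved event must have an identifiable venue.
    """
    venue = find_venue(event["venue"])
    if venue is None:
        return None  # discard: no identifiable venue

    artists = []
    for name in event.get("artists", []):
        artist = find_artist(name)
        if artist is None:
            continue  # the event is still saved, just without this artist
        # Only artists that were found trigger the extra details lookup.
        details = find_artist_details(artist["name"]) or {}
        artists.append({**artist, **details})

    return {**event, "venue": venue, "artists": artists}
```

Passing the lookups in as arguments keeps the rules testable without network access.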

Learnings

- Google Maps

const { Client } = require("@googlemaps/google-maps-services-js");

const params = {
  input: event.venue,
  inputtype: "textquery",
  key: process.env.NEXT_PUBLIC_GOOGLE_MAPS_API_KEY,
  fields: ["place_id", "name", "formatted_address", "geometry"],
  // Bias results toward a circle, written as circle:radius@lat,lng.
  locationbias: "circle:[email protected],-87.8967663",
};

await sleep();

const client = new Client({});
const gmapsResponse = await client
  .findPlaceFromText({ params });

- MusicBrainz artist search

const { compareTwoStrings } = require("string-similarity");

// Treat the two names as the same artist when their similarity score exceeds 0.5.
const isMatch = (valueFromEvent, valueFromMusicBrainz) => {
  const result = compareTwoStrings(valueFromEvent, valueFromMusicBrainz);
  return result > 0.5;
};

Note: string-similarity is deprecated but still has 1.5M weekly downloads.
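To show what this kind of check computes, here is a Python sketch. string-similarity is based on Dice's coefficient; the version below applies that coefficient to character bigrams as an illustrative approximation, not a port of the library. Lowercasing the input is an assumption of this sketch, the function names are hypothetical, and the `0.5` threshold is the one used in the snippet above.

```python
from collections import Counter


def _bigrams(text: str) -> Counter:
    """Count adjacent character pairs after removing whitespace and lowercasing."""
    compact = "".join(text.lower().split())
    return Counter(compact[i:i + 2] for i in range(len(compact) - 1))


def dice_similarity(first: str, second: str) -> float:
    """Dice coefficient over character bigrams, between 0.0 and 1.0."""
    a, b = _bigrams(first), _bigrams(second)
    total = sum(a.values()) + sum(b.values())
    if total == 0:
        # Both strings are too short to have bigrams; fall back to equality.
        same = "".join(first.lower().split()) == "".join(second.lower().split())
        return 1.0 if same else 0.0
    overlap = sum((a & b).values())  # multiset intersection of bigrams
    return 2 * overlap / total


def is_match(value_from_event: str, value_from_musicbrainz: str, threshold: float = 0.5) -> bool:
    return dice_similarity(value_from_event, value_from_musicbrainz) > threshold
```

A fuzzy threshold tolerates small differences between how a venue lists an artist and how MusicBrainz names it, such as a leading "The".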

- Redis

const client = await redis.connect();
// Cache the token for one hour (EX is the expiry in seconds).
await client.set("token", value, { EX: 60 * 60 });
...
const myQueue = new Queue("livemusic");

Back End

- Django REST Framework

# Model
from django.db import models

class Event(models.Model):
    name = models.CharField(max_length=240)
    ...

# Serializer
from rest_framework import serializers

class EventSerializer(serializers.ModelSerializer):
    class Meta:
        model = Event

    def create(self, validated_data):
        ...

# View
from rest_framework import generics, mixins

class EventViewSet(
    mixins.CreateModelMixin,
    mixins.ListModelMixin,
    mixins.RetrieveModelMixin,
    generics.GenericAPIView,
):
    queryset = Event.objects.all()
    serializer_class = EventSerializer

    def get(self, request, *args, **kwargs):
        # Retrieve a single event when the route supplies a pk; otherwise list events.
        if "pk" in kwargs:
            return self.retrieve(request, *args, **kwargs)
        return self.list(request, *args, **kwargs)

    def post(self, request, *args, **kwargs):
        return self.create(request, *args, **kwargs)

# URL
from django.urls import path

# Django routes should not start with a slash.
path("events", EventViewSet.as_view())

Static Website

The website is built with Next.js, which can export a site as static files. There is no application server, only HTML, CSS, and JS files, which Netlify builds and hosts.

When Next.js generates the static version, it pulls the JSON hosted in S3 to build the HTML. The client code also consumes the same JSON file to hydrate the application with the events for the other days of the week.

- Publish

- Opening Site

Reset

A daily job hits the API to get the events for the following seven days, then saves this data into an S3 bucket. This data is later used by Netlify to generate a new version of the web application.
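The shape of that daily snapshot might look like the sketch below. It is an assumption-laden illustration: the `date` field (an ISO date string) and the function name are hypothetical, and the upload to S3 is left out.

```python
import json
from collections import defaultdict
from datetime import date, timedelta


def build_weekly_payload(events: list[dict], start: date) -> str:
    """Group events from the seven days starting at `start` into JSON keyed by ISO date."""
    window = [(start + timedelta(days=offset)).isoformat() for offset in range(7)]
    by_day = defaultdict(list)
    for event in events:
        by_day[event["date"]].append(event)
    # Keep every day of the window, even when it has no events;
    # events dated outside the window are dropped.
    return json.dumps({day: by_day.get(day, []) for day in window})
```

Keying the file by date lets both the static build and the client code pick out a single day without filtering the full list.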

Page Speed

The page is optimized to score high on PageSpeed metrics. The following choices support that goal:

- Static Site

With no application server, the static site is served directly from a CDN, which keeps Time to First Byte (TTFB) low.

- Simplistic Above the Fold Presentation

The content above the fold is very simple. The intention here is to avoid slow paints, improving First Contentful Paint (FCP) and Largest Contentful Paint (LCP).

- Inline Styles

Styles are inlined in the HTML, so the page arrives already styled. This reduces layout jumps caused by waiting for separate CSS files to download and be processed.

- Lazy load Images

The Next.js Image component lazy loads by default, so images outside the viewport are not requested or processed until they are close to the viewport.

Machine Learning

Considering there is information about the artists, such as their social networks, a model was built to use that data to predict their popularity. This value is used on the website to sort the events.

- Python

import pandas as pd

# fetch
dataset = pd.read_csv("./data/artists.csv")

# clean
dataset = cleaned_data(dataset)

# split
train_features, test_features, train_labels, test_labels = get_split_data(dataset)

# train
model = get_model(train_features, train_labels)
# tf.keras.Sequential([normalized_dataset, layers.Dense(units=1)])

# evaluate
model.evaluate(test_features, test_labels)

# predict
model.predict(x)

# save
model.save()
model.export()

- JS

const model = await tf.loadGraphModel('model.json');
const prediction = model.predict(x);
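Once each artist has a predicted popularity, ordering the events is a small step. A minimal sketch, assuming each event carries an `artists` list whose entries may include a `popularity` number (hypothetical field names), and assuming an event is ranked by its most popular artist:

```python
def sort_events_by_popularity(events: list[dict]) -> list[dict]:
    """Order events by their highest predicted artist popularity, descending.

    Events without any scored artist are placed last.
    """
    def score(event: dict) -> float:
        scores = [
            artist["popularity"]
            for artist in event.get("artists", [])
            if "popularity" in artist
        ]
        return max(scores) if scores else float("-inf")

    return sorted(events, key=score, reverse=True)
```

Placing unscored events last keeps them visible without letting them push ranked events down the page.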

Links

Contributors

- Jaime Garcia Diaz [Chicago]

- Alex Romo [San Diego]

- Octavio Fuentes [Mexico City]